Metadata is something that goes on behind the scenes and is rarely of concern to either authors or readers of scientific articles. Here I tell a story where it has rather greater exposure. For journals in science and chemistry, each article published has a corresponding metadata record, associated with the persistent identifier of the article, known to most as its DOI. The metadata contains information about the article such as its authors and their affiliations, its title and its abstract, and is registered with Crossref – an organisation set up in 1999 on behalf of publishers, libraries, research institutions and funders. A relatively recent addition to Crossref metadata is the list of citations included in the article, so-called Open Citations. Including them has helped to create the new area of article metrics, used by e.g. Altmetrics or Dimensions to help identify the impacts that science publications have. Basically, if one article is cited by another, it is making an impact. Many citations of a given article by other articles mean a larger impact. Most researchers love to have a high – and of course positive – impact and, perhaps for better or worse, academic careers to some extent depend on such impacts.

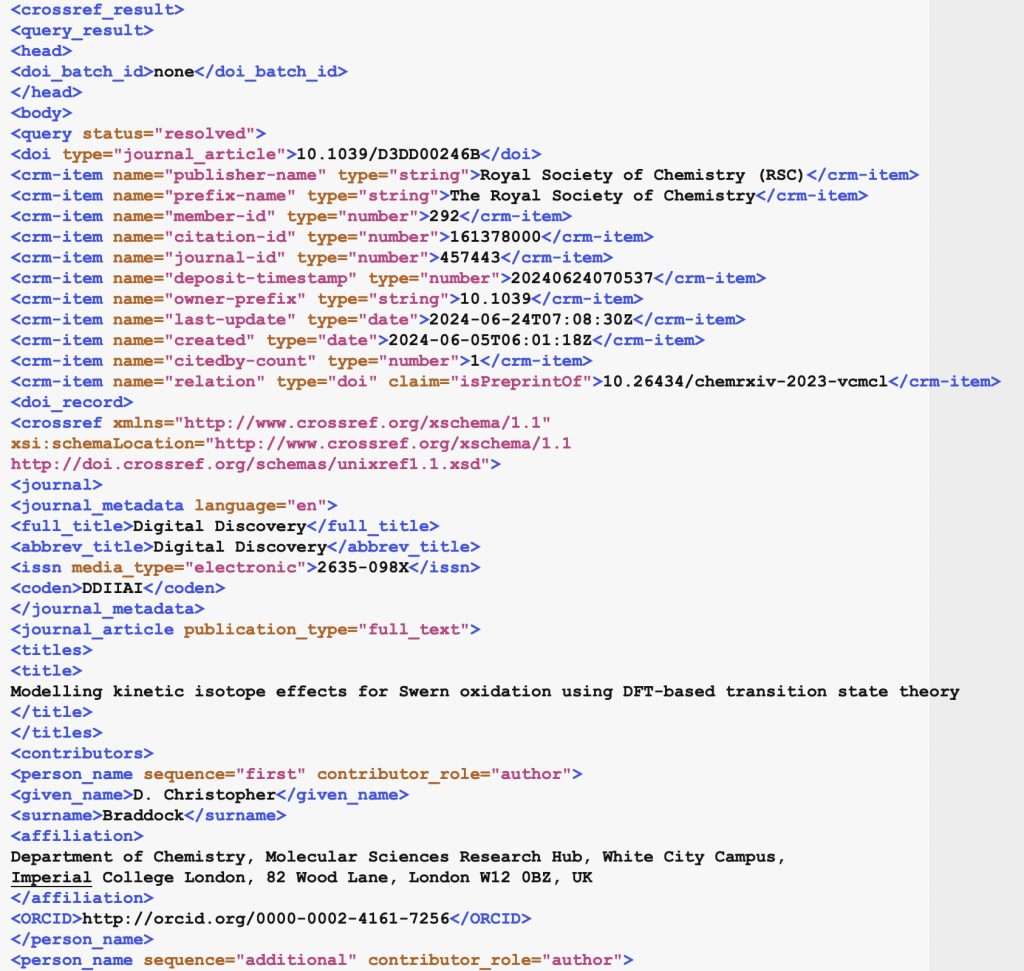

With that as the background, I now move to a recent article of ours.[1] The metadata record for this article can be obtained using the query:

https://api.crossref.org/works/10.1039/D3DD00246B/transform/application/vnd.crossref.unixsd+xml (retrieved 14/07/2024).‡
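For readers who would rather script this query than paste it into a browser, here is a minimal Python sketch using only the standard library. The function names `unixsd_url` and `fetch_unixsd` and the User-Agent string are illustrative assumptions of mine; only the URL pattern itself comes from the query above.

```python
from urllib.parse import quote
from urllib.request import Request, urlopen

CROSSREF_WORKS = "https://api.crossref.org/works"
UNIXSD = "application/vnd.crossref.unixsd+xml"


def unixsd_url(doi: str) -> str:
    """Build the transform URL quoted above for a given DOI (hypothetical helper)."""
    # Keep "/" unescaped so the DOI appears in the path exactly as in the query.
    return f"{CROSSREF_WORKS}/{quote(doi, safe='/')}/transform/{UNIXSD}"


def fetch_unixsd(doi: str, timeout: float = 30.0) -> str:
    """Download the metadata record as XML text (hypothetical helper, needs network)."""
    # The User-Agent value is a placeholder, not something Crossref prescribes.
    request = Request(unixsd_url(doi), headers={"User-Agent": "metadata-sketch/0.1"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Only prints the URL; call fetch_unixsd(...) to actually retrieve the record.
    print(unixsd_url("10.1039/D3DD00246B"))
```

Because the record can change over time, it is probably worth storing the retrieval date alongside any XML downloaded this way.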

The article has 63 citations in its body, with the unusual but pertinent aspect that 30 of these relate not to other articles or to web links, but to data – specifically FAIR data. We even comment on this in our conclusions – “The citations noted here are included in the metadata record for the article, which is registered with Crossref, albeit with one significant current limitation in that there is currently no formal declaration of these citations as specific pointers to a FAIR data collection.” This statement was made on the premise that the article citations would show a 1:1 match with the metadata entries (which they do, see below; but see also here[2]).

Before I take a look at this, I note that Crossref metadata does not treat all citations equally. The traditional form of citation is illustrated by reference 25 (there are 29 of these in total).

<citation key="D3DD00246B/cit25/1"> <journal_title>J. Chem. Phys.</journal_title> <author>Scalmani</author> <cYear>2010</cYear> <first_page>114110</first_page> <doi>10.1063/1.3359469</doi> </citation>

A variant of this is used for variations on journal articles such as preprints, where an “unstructured” component is added to the citation. This is often used as a short commentary added by the authors relating to the citation – in this case indicating that it relates to a preprint of the article itself. The term “unstructured” also means that the commentary may not have any predictable patterns, or use any terms from a specified dictionary, and may need the special expertise of a human to process it. In other words, “unstructured” components may not be “machine friendly”, or a machine may have to work quite hard to work out what to do about the commentary.

<citation key="D3DD00246B/cit10/1"> <volume_title>ChemRxiv</volume_title> <author>Braddock</author> <cYear>2024</cYear> <doi>10.26434/chemrxiv-2023-vcmcl</doi> <unstructured_citation>For a preprint, see, D. C.Braddock, S.Lee and H. S.Rzepa, SWERN Oxidation. transition structure Theory is OK, ChemRxiv, 2023, preprint, 10.26434/chemrxiv-2023-vcmcl </unstructured_citation> </citation>

A third variation on this is present, but this time apparently relating to data itself. Note again the use of an “unstructured” commentary, which effectively adds the information that the citation might “apparently” relate to data. To be fair, the volume title also does that, but this should not be its job!

<citation key="D3DD00246B/cit19/1"> <volume_title>Imperial College Research Data Repository</volume_title> <author>Braddock</author> <cYear>2023</cYear> <doi>10.14469/hpc/13108</doi> <unstructured_citation> D. C.Braddock , H. S.Rzepa and S.Lee, Imperial College Research Data Repository, 2023, 10.14469/hpc/13108</unstructured_citation> </citation>
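To illustrate how a machine currently sees these three forms, here is a rough Python sketch that separates citations carrying an `<unstructured_citation>` from those that do not. It assumes the three snippets above are wrapped in a `<citation_list>` root and carry no XML namespace, exactly as quoted here; the full record most likely declares namespaces, in which case the tag names would need qualifying. The function name `split_citations` is my own.

```python
import xml.etree.ElementTree as ET

# The three citations quoted above, wrapped in one root element so they parse
# as a single document. Namespace-free, as shown in the post (an assumption).
SAMPLE = """
<citation_list>
  <citation key="D3DD00246B/cit25/1">
    <journal_title>J. Chem. Phys.</journal_title>
    <author>Scalmani</author>
    <cYear>2010</cYear>
    <first_page>114110</first_page>
    <doi>10.1063/1.3359469</doi>
  </citation>
  <citation key="D3DD00246B/cit10/1">
    <volume_title>ChemRxiv</volume_title>
    <author>Braddock</author>
    <cYear>2024</cYear>
    <doi>10.26434/chemrxiv-2023-vcmcl</doi>
    <unstructured_citation>For a preprint, see, D. C.Braddock, S.Lee and H. S.Rzepa, SWERN Oxidation. transition structure Theory is OK, ChemRxiv, 2023, preprint, 10.26434/chemrxiv-2023-vcmcl </unstructured_citation>
  </citation>
  <citation key="D3DD00246B/cit19/1">
    <volume_title>Imperial College Research Data Repository</volume_title>
    <author>Braddock</author>
    <cYear>2023</cYear>
    <doi>10.14469/hpc/13108</doi>
    <unstructured_citation> D. C.Braddock , H. S.Rzepa and S.Lee, Imperial College Research Data Repository, 2023, 10.14469/hpc/13108</unstructured_citation>
  </citation>
</citation_list>
"""


def split_citations(xml_text):
    """Return (structured_only, with_unstructured) lists of citation keys."""
    root = ET.fromstring(xml_text)
    structured_only, with_unstructured = [], []
    for citation in root.iter("citation"):
        # find() returns None when the child element is absent.
        has_commentary = citation.find("unstructured_citation") is not None
        target = with_unstructured if has_commentary else structured_only
        target.append(citation.get("key"))
    return structured_only, with_unstructured


if __name__ == "__main__":
    plain, commented = split_citations(SAMPLE)
    print("structured only:  ", plain)
    print("with unstructured:", commented)
```

Note that this split cannot tell the preprint (citation 10) from the data (citation 19): both simply land in the second list, which is the limitation discussed next.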

Why might this be important? Well, the mantra nowadays is that information has to be processable not only by humans but also by machines undertaking learning or “artificial intelligence”. Such ML/AI is at least in part about finding predictable patterns in data, and unstructured citations imply a certain lack of predictability! A machine can “read” a journal article, and that should also be possible for the data on which the inferences reported in the article are based. So that data has to be findable and accessible in the first instance, and then interoperable and re-usable in the second. These four attributes are known as FAIR. So it would be great if the metadata for the article could indicate to a machine when associated data might be available – and even better to suggest that this data might have the attributes of FAIR.

So we now understand that there does need to be a formal, agreed way of specifically expressing a data citation in the Crossref metadata, rather than just carrying an unstructured commentary in the citation. The good news is that one is on the way! A public discussion document requests comments by August 15th, 2024 and introduces two new Crossref additions to the metadata, which are interpreted below in terms of the article we are discussing.

- <citation type="dataset" key="D3DD00246B/cit19/1"> <volume_title>Imperial College Research Data Repository</volume_title> <author>Braddock</author> <cYear>2023</cYear> <doi>10.14469/hpc/13108</doi> </citation>

A more formal statement is also now added, and I quote Crossref’s reasons for its inclusion: “we’d like to support several types of free-text statements in our metadata records as we’ve had feedback that they can be useful for downstream metadata users who are able to parse out and refine chunks of text in ways that may be useful. The statements are also useful for re-use in some situations.” In some ways, it replaces the unstructured citation from the example above, but now using a controlled dictionary term to specifically relate to data.

- <statement type="data availability">Data Availability and Discovery Statement</statement>

Let us now see how all this is handled for the article we are discussing.[1]

- The data itself, found as a collection with its own metadata record,[3] can and does cite the article.[1]

- The Crossref metadata record for the article as of 17.07.2024 has 38 entries which include an <unstructured_citation>, including 30 relating to data (which are currently inferred by a human).

- If the metadata changes noted above are implemented, the 30 data citations will be clearly identified as such, as in the example shown in item 1 above, and no human inference would be needed.
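The difference between human inference and a formal declaration can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `type="dataset"` attribute is taken from my interpretation of the discussion document above and may not match the final schema, and the `DATA_HINTS` list is a hypothetical stand-in for the kind of guesswork a human currently applies.

```python
import xml.etree.ElementTree as ET

# Citation 19 as currently registered, and in the proposed form shown above.
CURRENT_FORM = """
<citation key="D3DD00246B/cit19/1">
  <volume_title>Imperial College Research Data Repository</volume_title>
  <author>Braddock</author>
  <cYear>2023</cYear>
  <doi>10.14469/hpc/13108</doi>
  <unstructured_citation> D. C.Braddock , H. S.Rzepa and S.Lee, Imperial College Research Data Repository, 2023, 10.14469/hpc/13108</unstructured_citation>
</citation>
"""

PROPOSED_FORM = """
<citation type="dataset" key="D3DD00246B/cit19/1">
  <volume_title>Imperial College Research Data Repository</volume_title>
  <author>Braddock</author>
  <cYear>2023</cYear>
  <doi>10.14469/hpc/13108</doi>
</citation>
"""

# Hypothetical phrases a script might look for in place of human judgement.
DATA_HINTS = ("data repository",)


def is_data_by_guesswork(citation):
    """Guess from the volume title, which is fragile and repository-specific."""
    title = citation.findtext("volume_title", default="").lower()
    return any(hint in title for hint in DATA_HINTS)


def is_data_by_declaration(citation):
    """Rely on the explicit attribute (assumed name and value: type="dataset")."""
    return citation.get("type") == "dataset"


if __name__ == "__main__":
    current = ET.fromstring(CURRENT_FORM)
    proposed = ET.fromstring(PROPOSED_FORM)
    print("guesswork, current record:  ", is_data_by_guesswork(current))
    print("declaration, current record:", is_data_by_declaration(current))
    print("declaration, proposed form: ", is_data_by_declaration(proposed))
```

The guesswork only succeeds here because the repository happens to have “Data Repository” in its name; the declared form would work for any repository.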

The Crossref public discussion document will remain available for another four weeks or so – meanwhile, public comments are requested! Once these enhancements have been implemented, we hope that the article metadata record we are analysing here can in turn be updated‡ to reflect the FAIR data richness of the article. And then perhaps Altmetrics or Dimensions can start producing metrics relating to the impact of cited data. Watch this space!

‡One difference between the article itself and its metadata record is that the former does not change (unless a corrigendum is issued) – it is a so-called Version-of-Record or VOR – whereas the metadata record itself can be responsibly updated when deemed necessary. So it is important to note the date associated with any given version of a metadata record.

References

- D.C. Braddock, S. Lee, and H.S. Rzepa, "Modelling kinetic isotope effects for Swern oxidation using DFT-based transition state theory", Digital Discovery, vol. 3, pp. 1496-1508, 2024. https://doi.org/10.1039/d3dd00246b

- L. Besançon, G. Cabanac, C. Labbé, and A. Magazinov, "Sneaked references: Cooked reference metadata inflate citation counts", arXiv, 2023. https://doi.org/10.48550/arxiv.2310.02192

- H. Rzepa, "Modelling kinetic isotope effects for SWERN Oxidation. DFT-based transition structure Theory is OK.", 2023. https://doi.org/10.14469/hpc/13058